So tomorrow is my generals exam (the title’s a bit misleading: I’m actually going to be presenting research I’ve done so my committee can decide if I’m ready to start work on my dissertation–fingers crossed!). I thought it might be interesting to discuss some of the research I’m going to be presenting in a less formal setting first, though. It’s not at the same level of general interest as the Twitter research I discussed a couple weeks ago, but it’s still kind of a cool project. (If I do say so myself.)

Basically, I wanted to know whether there are carryover effects for some of the most commonly used linguistics tasks. A carryover effect is when you do something and whatever it was you were doing continues to affect you after you’re done. This comes up a lot when you want to test multiple things on the same person.

An example might help here. So let’s say you’re testing two new malaria treatments to see which one works best. You find some malaria patients, they agree to be in your study, and you give them treatment A and record their results. Afterwards, you give them treatment B and again record their results. But if it turns out that treatment A cures malaria (yay!) it’s going to look like treatment B isn’t doing anything, even if it is helpful, because everyone’s already been cured of malaria. So their behavior in the second condition (treatment B) is affected by their participation in the first condition (treatment A): the effects of treatment A have carried over.

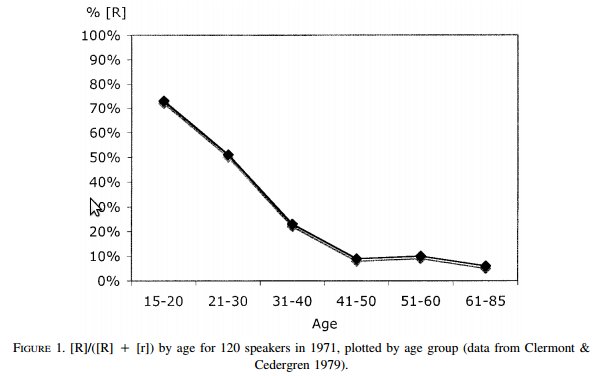

There are a couple of ways around this. The easiest one is to split your group of participants in half and give half of them A first and half of them B first. However, a lot of times when people are using multiple linguistic tasks in the same experiment, they won’t do that. Why? Because one of the things that linguists, especially sociolinguists, want to control for is speech style. And there’s a popular idea in sociolinguistics that you can make someone talk more formally, but it’s really hard to make them talk less formally. So you tend to end up with a fixed task order going from informal tasks to more formal tasks.
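If you’re curious what the split-in-half approach looks like in practice, here’s a minimal sketch in Python. To be clear, this isn’t code from my experiment: the function name `counterbalance`, the participant labels and the `seed` parameter are all made up for illustration.

```python
import random

def counterbalance(participants, orders=(("A", "B"), ("B", "A")), seed=None):
    """Randomly assign each participant a task order, keeping group sizes
    as equal as possible. (Hypothetical helper, for illustration only.)"""
    rng = random.Random(seed)          # seed only matters for reproducibility
    shuffled = list(participants)      # copy so the caller's list is untouched
    rng.shuffle(shuffled)              # random order, so assignment isn't systematic
    # Deal the orders out in turn: 1st person gets A-then-B, 2nd gets B-then-A, ...
    return {person: orders[i % len(orders)] for i, person in enumerate(shuffled)}

# Example: six hypothetical participants, three end up in each order.
assignment = counterbalance(["p1", "p2", "p3", "p4", "p5", "p6"], seed=1)
```

Shuffling before dealing out the orders means who ends up in which group is random, while the groups themselves stay the same size (or within one person of each other if the headcount is odd).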

So, we have two separate ideas here:

- The idea that one task can affect the next, and so we need to change task order to control for that

- The idea that you can only go from less formal speech to more formal speech, so you need to not change task order to control for that

So what’s a poor linguist to do? Balance task order to prevent carryover effects but risk not getting the informal speech they’re interested in? Or keep task order fixed to get informal and formal speech but at the risk of carryover effects? Part of the problem is that, even though they’re really well-studied in other fields like psychology, sociology or medicine, carryover effects haven’t really been studied in linguistics before. As a result, we don’t know how bad they are–or aren’t!

Which is where my research comes in. I wanted to see if there were carryover effects and what they might look like. To do this, I had people come into the lab and do a memory game that involved saying the names of weird-looking things called Fribbles aloud. No, not the milkshakes, one of the little purple guys below (although I could definitely go for a milkshake right now). Then I had them do one of four tasks: a linguistic elicitation task (reading a passage, doing an interview or reading a list of words) or, to control for the effects of just sitting there for a bit, an arithmetic task. Then I had them repeat the Fribble game. Finally, I compared a bunch of measures from speech I recorded during the two Fribble games to see if there were any differences.

What did I find? Well, first, I found the same thing a lot of other people have found: people tend to talk differently while doing different things. (If I hadn’t found that, then it would be pretty good evidence that I’d done something wrong when designing my experiment.) But the really exciting thing is that I found, for some specific measures, there weren’t any carryover effects. I didn’t find any carryover effects for speech speed, loudness or any changes in pitch. So if you’re looking at those things you can safely reorder your tasks to help avoid other effects, like fatigue.

But I did find that something a little more interesting was happening with the way people were saying their vowels. I’m not 100% sure what’s going on with that yet. The Fribble names were funny made-up words (like “Kack” and “Dut”) and I’m a little worried that what I’m seeing may be a result of that weirdness… I need to do some more experiments to be sure.

Still, it’s pretty exciting to find that there are some things it looks like you don’t need to worry about carryover effects for. That means that, for those things, you can have a static order to maintain the style continuum and it doesn’t matter. Or, if you’re worried that people might change what they’re doing as they get bored or tired, you can switch the order around to avoid having that affect your data.

![Jay-Z: By chickswithguns [CC-BY-SA-2.0 (http://creativecommons.org/licenses/by-sa/2.0)], via Wikimedia Commons Shakespeare: By It may be by a painter called John Taylor who was an important member of the Painter-Stainers' Company.[1] (Official gallery link) [Public domain], via Wikimedia Commons](https://makingnoiseandhearingthings.com/wp-content/uploads/2012/09/untitled.png?w=640&h=405)